Markov chains are one of the more interesting and fun areas of statistics to play around with, and they are especially well suited to text generation. So what are Markov chains, how do they work, and how can we use them to make text?

Simple Markov Chains

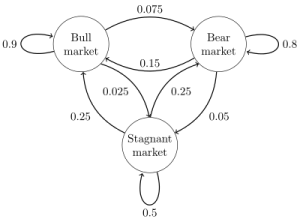

Markov chains describe the probabilistic relationships between different states. States are simply the different situations or values an object or scenario can take. For instance, a set of states for a person might be “sitting”, “standing”, “running”, “talking”, “laughing”, and “crying”. A Markov chain describes the probability of moving from any one state to another, including moving from a state to itself (e.g., from “sitting” to “sitting”). Crucially, the probability of the next move depends only on the current state, not on the states that came before it. In a simple 2-state Markov chain, there are 4 possible transitions: 2 that move from one state to the other, and 2 that move from a state back to itself. Markov chains become useful when these transitions have different probabilities. For example, each state may be more likely to stay where it is than to switch to the other one.
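To make the two-state idea concrete, here is a minimal Python sketch (not from the original post) of a chain with the states “sitting” and “standing”. The transition probabilities and the names `TRANSITIONS`, `next_state`, and `simulate` are hypothetical, chosen only for illustration. Note that `next_state` looks only at the current state when choosing where to go next.

```python
import random

# Hypothetical two-state chain for the "person" example above.
# Each inner dict lists the probabilities of moving from one state to each
# state (including itself); the numbers are made up for illustration.
TRANSITIONS = {
    "sitting": {"sitting": 0.8, "standing": 0.2},
    "standing": {"sitting": 0.3, "standing": 0.7},
}


def next_state(current, rng):
    """Pick the next state using only the current state's probabilities."""
    options = TRANSITIONS[current]
    states = list(options)
    weights = [options[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]


def simulate(start, steps, seed=None):
    """Walk the chain for `steps` transitions and return every visited state."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], rng))
    return path


# 2 states -> 4 possible transitions (two self-loops, two switches).
print(len([(a, b) for a in TRANSITIONS for b in TRANSITIONS[a]]))  # 4
print(simulate("sitting", 10, seed=1))
```

Because each state's self-loop has the higher probability in this made-up example, simulated paths tend to show long runs of the same state.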

Now, the way these probability relationships are described mathematically, through transition matrices, isn’t really important for our understanding of Markov chains. What matters is the basic idea: a Markov chain has different states, linked by the probabilities of moving between them. If you want a more visual or interactive explanation, I highly recommend this page (Markov chains: A visual explanation) by Victor Powell and Lewis Lehe.
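For the curious, the transition matrix for the same kind of made-up two-state example can be written as a list of rows, where row i holds the probabilities of moving from state i to every state. The sketch below uses hypothetical names (`P`, `step`) and assumed probabilities; it shows how multiplying a probability distribution by the matrix advances it by one step.

```python
# Hypothetical transition matrix for a two-state chain.
# Row i = probabilities of moving FROM state i TO each state j; rows sum to 1.
STATES = ["sitting", "standing"]
P = [
    [0.8, 0.2],  # from "sitting"
    [0.3, 0.7],  # from "standing"
]


def step(distribution, matrix):
    """Advance a probability distribution over states by one transition.

    new[j] = sum over i of distribution[i] * matrix[i][j]
    """
    n = len(matrix)
    return [sum(distribution[i] * matrix[i][j] for i in range(n)) for j in range(n)]


# Start certain that the person is sitting, then take two steps.
dist = [1.0, 0.0]
for _ in range(2):
    dist = step(dist, P)
print([round(p, 2) for p in dist])  # [0.7, 0.3]
```

Under these assumed numbers, two steps from “sitting” leave a 70% chance of sitting and a 30% chance of standing.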

Yes, We Can Make Text With This

It may seem strange, but we can actually use these statistical properties to generate text. Unlike generating text from a table, this kind of text generation needs very little formatting. Just make sure the source text is in the location your bot reads from, and you’re good to go. But how does this actually work?

Well, the bot analyzes the source text word by word, looking not only at each individual word but also at the words that follow it. Each distinct word occupies a state in the Markov chain, and how often one word follows another determines the probability of that transition. Add in a probability for which word starts the text, and you get a Markov chain capable of generating semi-coherent text. It’s difficult to provide a visual representation in this case, but hopefully this can at least help:
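The word-by-word process described above can be sketched in a few lines of Python. This is an illustrative sketch, not the bot’s actual code: `build_chain` and `generate` are hypothetical names, words are split on whitespace (so punctuation stays attached and capitalization matters), and the starting-word probability is approximated by collecting the words that begin sentences.

```python
import random
from collections import defaultdict


def build_chain(text):
    """Map each word to the list of words that follow it in the source.

    Duplicates are kept on purpose: a follower that appears twice is twice
    as likely to be picked, which encodes the transition probabilities.
    Sentence-initial words (the first word, or a word after . ! ?) are
    collected as possible starting words (an assumed heuristic).
    """
    words = text.split()
    chain = defaultdict(list)
    starts = [words[0]] if words else []
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
        if current.endswith((".", "!", "?")):
            starts.append(following)
    return chain, starts


def generate(chain, starts, max_words=20, seed=None):
    """Random walk: choose a start word, then repeatedly pick a follower."""
    rng = random.Random(seed)
    word = rng.choice(starts)
    output = [word]
    # Stop at the length limit or when a word has no recorded followers.
    while len(output) < max_words and chain.get(word):
        word = rng.choice(chain[word])
        output.append(word)
    return " ".join(output)


source = "The cat sat on the mat. The dog sat on the cat. A dog ran."
chain, starts = build_chain(source)
print(chain["sat"])  # ['on', 'on']
print(generate(chain, starts, seed=42))
```

Storing followers as a list with duplicates keeps the sketch short: `random.choice` then picks each follower in proportion to how often it appeared after the current word in the source.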

This Markov chain visualization shows a state diagram of different markets and the probabilities of moving between them. Imagine instead that this were a state diagram of three different words: it would show how likely each word is to be followed by each of the others. You don’t need to fully understand the statistics behind the states here. The key concept is that Markov chain text generation uses probabilistic data to decide which words are likely to follow other words.
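To see where the likelihood of one word being followed by another comes from, the sketch below counts word pairs and normalizes the counts into probabilities. The three-word source (“red”, “blue”, “green”) and the function name `transition_probabilities` are made up for illustration; each word plays the role of one state in a three-state diagram.

```python
from collections import Counter, defaultdict


def transition_probabilities(words):
    """Estimate P(next word | current word) from counts of adjacent pairs."""
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    probs = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        # Each row of probabilities sums to 1, like a row of a transition matrix.
        probs[current] = {w: n / total for w, n in followers.items()}
    return probs


# A hypothetical three-word "source" standing in for a three-state diagram.
words = "red blue red green red blue blue red".split()
for word, nexts in sorted(transition_probabilities(words).items()):
    print(word, nexts)
```

Here “red” is followed by “blue” twice and by “green” once, so the sketch assigns those transitions probabilities of 2/3 and 1/3.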

I just wanted to make text, man, not all this math stuff

The good news is that most of the math is irrelevant for our purposes. As long as you understand the key concept, you can implement Markov chain text generation. So the question becomes: what kind of source material do you want? This form of text generation tends to emulate its source material, so the more distinctive your source, the more recognizable and coherent the output will often be. Typically, the biggest factor in producing interesting Markov chain text is the volume of source material. If you want to emulate Edgar Allan Poe, a single poem won’t get you very far.
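One rough way to see why volume matters is to measure how many words have more than one possible follower: a word with only one follower can only reproduce the source at that point. The sketch below uses a hypothetical `branching_ratio` helper. The small source is the opening line of Poe’s “The Raven”, lowercased and stripped of punctuation, and the “bigger” source just appends some made-up filler, so the numbers illustrate the trend rather than measure anything real.

```python
from collections import defaultdict


def branching_ratio(text):
    """Fraction of words (that have any follower) with more than one distinct follower.

    Where a word has a single follower, the chain has no choice and simply
    copies the source; more source text usually means more choices.
    """
    words = text.lower().split()
    followers = defaultdict(set)
    for current, following in zip(words, words[1:]):
        followers[current].add(following)
    if not followers:
        return 0.0
    branching = sum(1 for nexts in followers.values() if len(nexts) > 1)
    return branching / len(followers)


small = "once upon a midnight dreary while i pondered weak and weary"
bigger = small + " once upon a time i pondered a dreary midnight and a weary while"
print(branching_ratio(small))   # 0.0
print(branching_ratio(bigger))  # 0.5
```

With the single line, every word has exactly one follower, so generated text could only repeat the line; adding more text gives half of the words a real choice.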

The problem is that it is difficult to import large amounts of text that isn’t already in a digital format. You could try typing Moby-Dick into your source, but it’s not the most practical idea. This is where resources like Project Gutenberg come in handy. Project Gutenberg is an online collection of books and texts available for free. Many of the books are fairly old, since they are no longer under copyright. The project is fully cataloged and searchable, offering over 54,000 ebooks, so chances are you can find an interesting text to use. Be careful, though, and make sure you have the proper rights to use a text for your bot.
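Once you have downloaded a plain-text ebook from Project Gutenberg, you will usually want to drop the license header and footer so they don’t leak into your bot’s output. This is a hedged sketch: the marker wording varies between files, so the regular expressions only approximate it, and `strip_gutenberg_boilerplate`, `load_source`, and the sample text are hypothetical. Check the markers in the file you actually download.

```python
import re

# Assumed marker patterns: Gutenberg files typically contain lines like
# "*** START OF THE PROJECT GUTENBERG EBOOK ... ***", but wording varies
# ("THE"/"THIS", casing), so this matching is deliberately loose.
START_RE = re.compile(
    r"^\*\*\* ?START OF (?:THE|THIS) PROJECT GUTENBERG EBOOK.*$",
    re.IGNORECASE | re.MULTILINE,
)
END_RE = re.compile(
    r"^\*\*\* ?END OF (?:THE|THIS) PROJECT GUTENBERG EBOOK.*$",
    re.IGNORECASE | re.MULTILINE,
)


def strip_gutenberg_boilerplate(raw):
    """Return only the text between the START and END markers.

    Falls back to the whole (stripped) text if either marker is missing.
    """
    start = START_RE.search(raw)
    end = END_RE.search(raw)
    if not start or not end or end.start() <= start.end():
        return raw.strip()
    return raw[start.end():end.start()].strip()


def load_source(path):
    """Read a downloaded .txt file (hypothetical path); utf-8-sig drops a BOM."""
    with open(path, encoding="utf-8-sig") as f:
        return strip_gutenberg_boilerplate(f.read())


# Illustrative mock-up of a Gutenberg file, not a real download.
sample = """Header and license text
*** START OF THE PROJECT GUTENBERG EBOOK EXAMPLE ***
Call me Ishmael.
*** END OF THE PROJECT GUTENBERG EBOOK EXAMPLE ***
Footer"""
print(strip_gutenberg_boilerplate(sample))  # Call me Ishmael.
```

The cleaned string from `load_source` can then serve as the source text for a word-level chain.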

Wrapping this up

This is a shorter post, as there isn’t much more to delve into here. We won’t walk through a full implementation in this post, but we will definitely be getting into code soon. The main purpose of this post is to familiarize you with Markov chains and their application to text generation, which we will later show you how to implement in a Twitter bot. Keep an eye out for our next post about Tracery, an excellent grammar tool for making bots. Leave any questions in the comments below or tweet us @DH_UNT.
